Penetration Testing, Incident Response and Forensics

This course offers 4 modules…

“Penetration testing is security testing in which assessors mimic real-world attacks to identify methods for circumventing the security features of an application, system, or network. It often involves launching real attacks on real systems and data that use tools and techniques commonly used by attackers.”

| Desktop | Mobile |
|---|---|
| Windows | iOS |
| Unix | Android |
| Linux | Blackberry OS |
| macOS | Windows Mobile |
| ChromeOS | WebOS |
| Ubuntu | Symbian OS |

Vulnerability scanning can help identify outdated software versions, missing patches, and misconfigurations, and validate compliance with or deviations from an organization’s security policy. This is done by identifying the OSes and major software applications running on the hosts and matching them with information on known vulnerabilities stored in the scanners’ vulnerability databases.
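
The matching step described above can be sketched in a few lines of Python. This is only an illustration, not how any particular scanner works: the product names, version numbers, and advisory IDs in `VULN_DB` are hypothetical, and real scanners use far richer fingerprinting and version logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnownVulnerability:
    product: str      # software name as reported by the host inventory
    fixed_in: tuple   # first version that contains the fix
    advisory: str     # hypothetical advisory identifier


def parse_version(text):
    """Turn a dotted version string such as '2.4.49' into (2, 4, 49)."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical vulnerability database, for illustration only.
VULN_DB = [
    KnownVulnerability("exampled", (2, 4, 50), "EXAMPLE-0001"),
    KnownVulnerability("samplessh", (8, 0), "EXAMPLE-0002"),
]


def find_outdated(inventory, vuln_db=VULN_DB):
    """Return (host, product, version, advisory) for every installed
    version that is older than the version containing the fix."""
    findings = []
    for host, software in inventory.items():
        for product, version in software:
            for vuln in vuln_db:
                if product == vuln.product and parse_version(version) < vuln.fixed_in:
                    findings.append((host, product, version, vuln.advisory))
    return findings


# Hypothetical host inventory: host name -> list of (product, version).
inventory = {
    "web01": [("exampled", "2.4.49"), ("samplessh", "8.2")],
    "db01": [("samplessh", "7.9")],
}

for finding in find_outdated(inventory):
    print(finding)
```

Here `web01` is flagged for the outdated `exampled` package and `db01` for the outdated `samplessh` package, while the patched `samplessh` on `web01` is not reported.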

A Google Dork query, sometimes just referred to as a dork, is a search string that uses advanced search operators to find information that is not readily available on a website.
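
As a small illustration of how such a search string is assembled, the sketch below joins `operator:value` pairs into one query. The helper name `build_dork` is hypothetical; `site:`, `filetype:`, and `intitle:` are commonly used search operators, and `example.com` is a placeholder domain.

```python
def build_dork(**operators):
    """Join operator/value pairs into a single search string.
    Values that contain spaces are wrapped in double quotes."""
    parts = []
    for name, value in operators.items():
        if " " in value:
            value = f'"{value}"'
        parts.append(f"{name}:{value}")
    return " ".join(parts)


print(build_dork(site="example.com", filetype="pdf"))
print(build_dork(site="example.com", intitle="index of"))
```

The first call produces `site:example.com filetype:pdf` and the second produces `site:example.com intitle:"index of"`.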

What Data Can We Find Using Google Dorks?

| Passive | Active |
|---|---|
| Monitoring employees | Network Mapping |
| Listening to network traffic | Port Scanning |
| | Password cracking |
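
To make the active technique of port scanning concrete, here is a minimal TCP connect scan sketch using Python's standard `socket` module. The function name `scan_ports` and the default timeout are assumptions for illustration, and a scan like this should only be run against hosts you are authorized to test.

```python
import socket


def scan_ports(host, ports, timeout=0.5):
    """Try a full TCP connection to each port and return the ones that accept."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 when the TCP handshake succeeds
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

For example, `scan_ports("127.0.0.1", range(20, 1025))` would list the low-numbered TCP ports accepting connections on the local machine. Dedicated scanners add techniques such as half-open scans and service detection that this sketch does not attempt.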

“Social Engineering is an attempt to trick someone into revealing information (e.g., a password) that can be used to attack systems or networks. It is used to test the human element and user awareness of security, and can reveal weaknesses in user behavior.”

“While vulnerability scanners check only for the possible existence of a vulnerability, the attack phase of a penetration test exploits the vulnerability to confirm its existence.”

“This section will communicate to the reader the specific goals of the Penetration Test and the high level findings of the testing exercise.”

Introduction

Personnel involved

Contact information

Assets involved in testing

Objectives of Test

Scope of test

Strength of test

Approach

Threat/Grading Structure

Scope

Information gathering

Passive intelligence

Active intelligence

Corporate intelligence

Personnel intelligence

Vulnerability Assessment: In this section, a definition of the methods used to identify the vulnerability, as well as the evidence/classification of the vulnerability, should be present.

Vulnerability Confirmation: This section should review, in detail, all the steps taken to confirm the defined vulnerability as well as the following:

Exploitation Timeline

Targets selected for Exploitation

Exploitation Activities

Post Exploitation

Escalation path

Acquisition of Critical Information

Value of information

Access to core business systems

Access to compliance protected data sets

Additional information/systems accessed

Ability of persistence

Ability for exfiltration

Countermeasure effectiveness

Risk/Exposure: This section will cover the business risk in the following subsections:

Evaluate incident frequency

Estimate loss magnitude per incident

Derive Risk
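
The source lists these three steps without giving a formula. One common way to combine them, assumed here purely for illustration, is to multiply the expected number of incidents per year by the estimated loss per incident. The function name and the figures are hypothetical.

```python
def annualized_risk(incidents_per_year, loss_per_incident):
    """Expected yearly loss: frequency multiplied by magnitude (an assumed model)."""
    return incidents_per_year * loss_per_incident


# One incident expected every two years, each costing an estimated 200,000.
print(annualized_risk(0.5, 200_000))  # 100000.0
```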

“Preventive activities based on the results of risk assessments can lower the number of incidents, but not all incidents can be prevented. An incident response is therefore necessary for rapidly detecting incidents, minimizing loss and destruction, mitigating the weaknesses that were exploited, and restoring IT services.”

“An event can be something as benign and unremarkable as typing on a keyboard or receiving an email.”

In some cases, if there is an Intrusion Detection System (IDS), the alert can be considered an event until validated as a threat.

“An incident is an event that negatively affects IT systems and impacts on the business. It’s an unplanned interruption or reduction in quality of an IT service.”

An event can lead to an incident, but not the other way around.

One of the benefits of having an incident response capability is that it supports responding to incidents systematically so that the appropriate actions are taken. It helps personnel minimize loss or theft of information and disruption of services caused by incidents, and use information gained during incident handling to better prepare for handling future incidents.

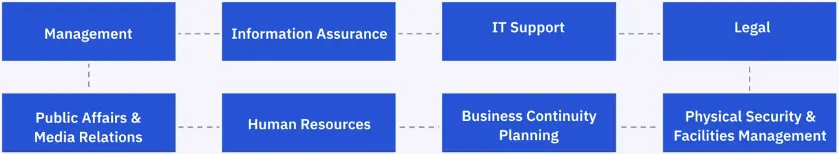

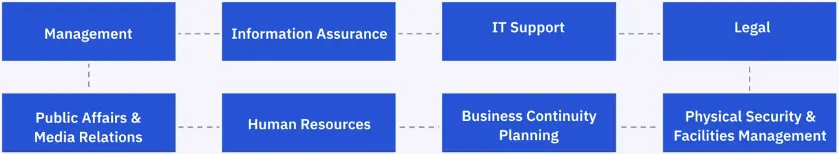

Incidents don’t occur in a vacuum and can have an impact on multiple parts of a business. Establish relationships with the following teams:

An organization should be generally prepared to handle any incident, but should focus on being prepared to handle incidents that use common attack vectors:

Knowing the answers to these will help your coordination with other teams and the media.

IR Policy needs to cover the following: IR Team

Incident Handler Communications and Facilities:

Contact information

On-call information

Incident reporting mechanisms

Issue tracking system

Smartphones

Encryption software

War room

Secure storage facility

Incident Analysis Hardware and Software:

Digital forensic workstations and/or backup devices

Laptops

Spare workstations, servers, and networking equipment

Blank removable media

Portable printer

Packet sniffers and protocol analyzers

Digital forensic software

Removable media

Evidence gathering accessories

Incident Analysis Resources:

Port lists

Documentation

Network diagrams and lists of critical assets

Current baselines

Cryptographic hashes

“Keeping the number of incidents reasonably low is very important to protect the business processes of the organization. If security controls are insufficient, higher volumes of incidents may occur, overwhelming the incident response team.”

So the best defense is:

Precursors

Web server log entries that show the usage of a vulnerability scanner.

An announcement of a new exploit that targets a vulnerability of the organization’s mail server.

A threat from a group stating that the group will attack the organization.
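
The first precursor above, scanner activity in web server logs, can be spotted with a simple search. The sketch below is an assumption-laden illustration: it flags log lines whose text contains a marker that some well-known scanners place in their default User-Agent string. The marker list is not exhaustive, the sample log lines are invented, and attackers can change the User-Agent freely.

```python
# Substrings seen in the default User-Agent of some common scanners.
SCANNER_MARKERS = ("nikto", "sqlmap", "nmap scripting engine")


def lines_with_scanner_agents(log_lines, markers=SCANNER_MARKERS):
    """Return the log lines that mention any known scanner marker."""
    hits = []
    for line in log_lines:
        lowered = line.lower()
        if any(marker in lowered for marker in markers):
            hits.append(line)
    return hits


# Invented sample entries using documentation-only IP addresses.
sample_log = [
    '192.0.2.10 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326 "-" "Mozilla/5.0"',
    '192.0.2.66 - - [10/Oct/2023:13:56:01 +0000] "GET /admin/ HTTP/1.1" 404 153 "-" "Mozilla/5.00 (Nikto/2.1.6)"',
]

for hit in lines_with_scanner_agents(sample_log):
    print(hit)
```

Only the second sample line is printed, because its User-Agent mentions Nikto.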

Indicators

Monitoring systems are crucial for early detection of threats.

These systems are not mutually exclusive and still require an IR team to document and analyze the data.

IDS vs. IPS: Both are parts of the network infrastructure. The main difference between them is that IDS is a monitoring system, while IPS is a control system.

DLP: Data Loss Prevention (DLP) is a set of tools and processes used to ensure that sensitive data is not lost, misused, or accessed by unauthorized users.

SIEM: Security Information and Event Management solutions combine Security Event Management (SEM), which analyzes event and log data in real time, with Security Information Management (SIM).

Regardless of the monitoring system, highly detailed, thorough documentation is needed for the current and future incidents.

“Containment is important before an incident overwhelms resources or increases damage. Containment strategies vary based on the type of incident. For example, the strategy for containing an email-borne malware infection is quite different from that of a network-based DDoS attack.”

An essential part of containment is decision-making. Such decisions are much easier to make if there are predetermined strategies and procedures for containing the incident.

“Evidence should be collected according to procedures that meet all applicable laws and regulations that have been developed from previous discussions with legal staff and appropriate law enforcement agencies so that any evidence can be admissible in court.” — NIST 800-61

Holding a “lessons learned” meeting with all involved parties after a major incident, and optionally periodically after lesser incidents as resources permit, can be extremely helpful in improving security measures and the incident handling process itself.

“Digital forensics, also known as computer and network forensics, has many definitions. Generally, it is considered the application of science to the identification, collection, examination, and analysis of data while preserving the integrity of the information and maintaining a strict chain of custody for the data.”

The first step in the forensic process is to identify potential sources of data and acquire data from them. The most obvious and common sources of data are desktop computers, servers, network storage devices, and laptops.

Collection: Identify, label, record, and acquire data from the possible sources, while preserving the integrity of the data.

Examination: Process large amounts of collected data to assess and extract data of particular interest.

Analysis: Analyze the results of the examination, using legally justifiable methods and techniques.

Reporting: Report the results of the analysis.

Develop a plan to acquire the data: Create a plan that prioritizes the sources, establishing the order in which the data should be acquired.

Acquire the data: Use forensic tools to collect the volatile data, duplicate non-volatile data sources, and secure the original data sources.

Verify the integrity of the data: Forensic tools can create hash values for the original source, so the duplicate can be verified as being complete and untampered with.
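
The integrity check can be sketched with Python's standard `hashlib` module. This is a minimal illustration rather than a replacement for a forensic tool: the function names are hypothetical and SHA-256 is an assumed choice of hash.

```python
import hashlib


def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large images do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def duplicate_matches(original_path, duplicate_path):
    """True when both files produce the same SHA-256 digest."""
    return sha256_of(original_path) == sha256_of(duplicate_path)
```

If `duplicate_matches` returns `False`, the copy differs from the source in at least one byte and should not be relied on for analysis.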

A clearly defined chain of custody should be followed to avoid allegations of mishandling or tampering of evidence. This involves keeping a log of every person who had physical custody of the evidence, documenting the actions that they performed on the evidence and at what time, storing the evidence in a secure location when it is not being used, making a copy of the evidence and performing examination and analysis using only the copied evidence, and verifying the integrity of the original and copied evidence.
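
A custody log of this kind can be modeled as an append-only list of entries. The sketch below is one possible shape, with hypothetical class and field names; in practice the log would also need tamper-resistant storage, which is not shown.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class CustodyEntry:
    custodian: str       # person who had physical custody
    action: str          # what they did with the evidence
    timestamp: datetime  # when they did it


@dataclass
class EvidenceItem:
    label: str
    sha256: str                              # hash recorded at acquisition
    log: list = field(default_factory=list)  # ordered custody entries

    def record(self, custodian, action, when=None):
        """Append a custody entry, timestamped in UTC unless a time is given."""
        entry = CustodyEntry(custodian, action, when or datetime.now(timezone.utc))
        self.log.append(entry)
        return entry


item = EvidenceItem(label="disk-01", sha256="<hash recorded at acquisition>")
item.record("A. Analyst", "Created bit stream image")
item.record("B. Examiner", "Received copy for analysis")
print(len(item.log))  # 2
```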

Bypassing Controls: OSs and applications may have data compression, encryption, or ACLs.

A Sea of Data: Hard drives may have hundreds of thousands of files, not all of which are relevant.

Tools: Various tools and techniques exist to help filter and exclude data from searches to expedite the process.

“The analysis should include identifying people, places, items, and events, and determining how these elements are related so that a conclusion can be reached.”

Correlating multiple sources of data is crucial to building a complete picture of what happened in the incident. NIST provides the example of an IDS log linking an event to a host, the host audit logs linking the event to a specific user account, and the host IDS log indicating what actions that user performed.

A case summary is meant to form the basis of opinions. While there are a variety of laws that relate to expert reports, the general rules are:

Deleted files: When a file is deleted, it is typically not erased from the media; instead, the information in the directory’s data structure that points to the location of the file is marked as deleted.

Slack space: If a file requires less space than the file allocation unit size, an entire file allocation unit is still reserved for the file.

Free space: Free space is the area on media that is not allocated to any partition. The free space may still contain pieces of data.
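
The slack space arithmetic can be shown with a short calculation. The 4096-byte allocation unit is an assumed example value, since the real size depends on how the volume was formatted, and the function name is hypothetical.

```python
def slack_bytes(file_size, allocation_unit=4096):
    """Unused bytes in the last allocation unit reserved for a file."""
    if file_size == 0:
        return 0  # assume an empty file reserves no allocation unit
    remainder = file_size % allocation_unit
    return 0 if remainder == 0 else allocation_unit - remainder


print(slack_bytes(1000))  # 3096
print(slack_bytes(4096))  # 0
print(slack_bytes(5000))  # 3192
```

A 1000-byte file still occupies one full 4096-byte unit, leaving 3096 bytes of slack that may hold remnants of earlier data.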

It’s important to know as much information about relevant files as possible. Recording the modification, access, and creation times of files allows analysts to help establish a timeline of the incident.
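
As a rough sketch of recording these times, Python's standard `os.stat` exposes them for a single file. The function name is hypothetical. Note that `st_ctime` means creation time on Windows but the last metadata change on Unix-like systems, and that such collection should normally be done on a copy or a read-only mount.

```python
import os
from datetime import datetime, timezone


def _utc(timestamp):
    """Convert a POSIX timestamp to a timezone-aware UTC datetime."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc)


def file_times(path):
    """Return the modification, access, and change/creation times of a file."""
    info = os.stat(path)
    return {
        "modified": _utc(info.st_mtime),
        "accessed": _utc(info.st_atime),
        # creation time on Windows, metadata-change time on Unix-like systems
        "changed_or_created": _utc(info.st_ctime),
    }
```

Sorting the results from many files by timestamp gives a first draft of the incident timeline.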

| Logical Backup | Imaging |
|---|---|
| A logical data backup copies the directories and files of a logical volume. It does not capture other data that may be present on the media, such as deleted files or residual data stored in slack space. | Generates a bit-for-bit copy of the original media, including free space and slack space. Bit stream images require more storage space and take longer to perform than logical backups. |
| Can be used on live systems if using standard backup software | If evidence is needed for legal or HR reasons, a full bit stream image should be taken, and all analysis done on the duplicate |
| May be resource intensive | Disk-to-disk vs. disk-to-file |
| | Should not be used on a live system, since data is always changing |

Many forensic products allow the analyst to perform a wide range of processes to analyze files and applications, as well as collecting files, reading disk images, and extracting data from files.

“OS data exists in both non-volatile and volatile states. Non-volatile data refers to data that persists even after a computer is powered down, such as a filesystem stored on a hard drive. Volatile data refers to data on a live system that is lost after a computer is powered down, such as the current network connections to and from the system.”

| Volatile | Non-Volatile |
|---|---|
| Slack Space | Configuration Files |
| Free Space | Logs |
| Network configuration/connections | Application files |
| Running processes | Data Files |
| Open Files | Swap Files |
| Login Sessions | Dump Files |
| Operating System Time | Hibernation Files |
| | Temporary Files |

Other logs can be collected depending on the incident under analysis:

The file systems used by Windows include FAT, exFAT, NTFS, and ReFS.

Investigators can search for evidence by analyzing the following important locations in Windows:

Recycle Bin

Registry

Thumbs.db

Files

Browser History

Print Spooling

Linux can provide empirical evidence if a Linux-embedded machine is recovered from a crime scene. In this case, forensic investigators should analyze the following folders and directories.

OSs, files, and networks are all needed to support applications: OSs to run the applications, networks to send application data between systems, and files to store application data, configuration settings, and the logs. From a forensic perspective, applications bring together files, OSs, and networks. — NIST 800-86

Certain types of applications are more likely to be the focus of forensic analysis, including email, Web usage, interactive messaging, file-sharing, document usage, security applications, and data concealment tools.

“From end to end, information regarding a single email message may be recorded in several places – the sender’s system, each email server that handles the message, and the recipient’s system, as well as the antivirus, spam, and content filtering server.” — NIST 800-45

| Web Data from Host | Web Data from Server |
|---|---|
| Typically, the richest sources of information regarding web usage are the hosts running the web browsers. | Another good source of web usage information is web servers, which typically keep logs of the requests they receive. |
| Favorite websites | Timestamps |
| History w/timestamps of websites visited | IP Addresses |
| Cached web data files | Web browser version |
| Cookies | Type of request |
| | Resource requested |
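
The server-side fields in the table can be pulled out of a log entry with a regular expression. The sketch below assumes the widely used Combined Log Format; other servers and custom formats would need a different pattern, and the sample line is invented.

```python
import re

# Pattern for one Combined Log Format entry (an assumed log format).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return the named fields of a log entry, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


sample = (
    '192.0.2.10 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /index.html HTTP/1.1" 200 2326 "-" "Mozilla/5.0"'
)
fields = parse_line(sample)
print(fields["timestamp"], fields["ip"], fields["method"], fields["resource"])
```

The `agent` field carries the browser identification string, which is where the browser version listed in the table would be found.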

Overview

“Analysts can use data from network traffic to reconstruct and analyze network-based attacks and inappropriate network usage, as well as to troubleshoot various types of operational problems. The term network traffic refers to computer network communications that are carried over wired or wireless networks between hosts.” — NIST 800-86

These sources collectively capture important data from all four TCP/IP layers.

“When analyzing most attacks, identifying the attacker is not an immediate, primary concern: ensuring that the attack is stopped and recovering systems and data are the main interests.” — NIST 800-86

Binary code represents text, computer processor instructions, or any other data using a two-symbol system. The two symbols used are often “0” and “1” from the binary number system.
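
As a quick illustration, the following sketch shows how a short piece of text looks as binary digits. It assumes UTF-8 encoding, and the function name is hypothetical.

```python
def to_binary(text):
    """Render each UTF-8 byte of the text as eight binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


print(to_binary("Hi"))  # 01001000 01101001
```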

Adding a binary payload to a shell script could, for instance, be used to create a single file shell script that installs your entire software package, which could be composed of hundreds of files.

Advanced hex editors have scripting systems that let the user create macro-like functionality as a sequence of user interface commands for automating common tasks. This can be used for providing scripts that automatically patch files (e.g., game cheating, modding, or product fixes provided by the community) or to write more complex/intelligent templates.
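
The core of such an automated patch can be sketched in plain Python rather than in any particular hex editor's scripting language. The function name, offsets, and byte values below are hypothetical; the check on the expected bytes is there so a patch is not applied to the wrong file version.

```python
def patch_bytes(data, offset, expected, replacement):
    """Return a copy of data with the bytes at offset replaced,
    but only if the bytes currently there match what the patch expects."""
    if len(expected) != len(replacement):
        raise ValueError("replacement must be the same length as the original bytes")
    end = offset + len(expected)
    if data[offset:end] != expected:
        raise ValueError("unexpected bytes at offset; refusing to patch")
    return data[:offset] + replacement + data[end:]


original = b"\x00\x01\x02\x03"
patched = patch_bytes(original, 1, b"\x01\x02", b"\xff\xee")
print(patched.hex())  # 00ffee03
```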